Depth ƒx

Lytro, Inc.

Architecture + UX + UI .:. 2015

Background

Depth ƒx is an approach to image manipulation that uses lightfield-generated depth maps to alter the color space and exposure of an image. The depth map used for interactive image refocus was already available, so I proposed a practical solution that combines it with traditional 2D masking techniques to isolate regions of the image for color space manipulation. The result is a novel approach that lets the user quickly alter the composition of a lightfield image by manipulating the tonality within a region.

Architecture

We approached image manipulation with the same constructs that typical compositing applications use: LAYERS and GROUPS.

Based on this premise, we devised a workflow built on the following principles:

- Filter containers would hold filters within a group and perform an ordered, logical set of image manipulation tasks.

- Following the principles of layered image stacks, image operations would be evaluated in a strict order, flowing from bottom (input) to top (output), so that image manipulation stays consistent and predictable for the user.

- Filter containers would include masking operations to isolate the region (or regions) affected by the filters within the container.

- zDepth, a mask type unique to lightfield image capture, would be a key component of the system's masking techniques.
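As a rough illustration of the stack principles above, the following Python sketch models a filter container and the bottom-to-top evaluation order. It is not the Depth ƒx implementation: the names `FilterContainer` and `evaluate_stack`, the grayscale list-of-floats image, and the plain Normal composite are all assumptions made for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical representation: a grayscale image as a flat list of floats in [0, 1].
Image = List[float]
Filter = Callable[[Image], Image]


@dataclass
class FilterContainer:
    filters: List[Filter] = field(default_factory=list)  # applied first to last
    mask: Optional[List[float]] = None  # per-pixel weight; None affects every pixel
    mix: float = 1.0  # opacity of this container's result

    def evaluate(self, previous: Image) -> Image:
        # Run the container's filters in order on the incoming image.
        result = previous
        for apply_filter in self.filters:
            result = apply_filter(result)
        # Mask and blend the filtered result back over the incoming image
        # (a plain "Normal" composite; other composite modes are omitted here).
        mask = self.mask if self.mask is not None else [1.0] * len(previous)
        return [p + (r - p) * m * self.mix for p, r, m in zip(previous, result, mask)]


def evaluate_stack(source: Image, stack: List[FilterContainer]) -> Image:
    # stack[0] is the bottom of the stack: evaluation flows bottom (input) to top (output).
    image = source
    for container in stack:
        image = container.evaluate(image)
    return image


def brighten(img: Image) -> Image:
    # Hypothetical example filter: double each value, clipped to 1.
    return [min(1.0, v * 2.0) for v in img]


# Usage: brighten only pixel 0 (via the mask), blended in at 50% mix.
result = evaluate_stack([0.2, 0.2], [FilterContainer([brighten], mask=[1.0, 0.0], mix=0.5)])
```

In the usage line, the masked pixel moves halfway toward its filtered value while the unmasked pixel is left untouched. Because each container only sees the output of the container below it, reordering the list changes the result, which is why a strict order keeps the outcome predictable.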

Because all of the masks are based on procedural primitives and relative zDepth data, the method also enables faster workflows: for example, sharing a common image manipulation technique between images, or saving one “look” and reusing it on other images.
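One way to see why procedural masks make looks reusable: if every mask is stored as parameters in resolution-independent units, a look is just a small document that can be resolved against any image. The JSON schema, key names, and helper functions below are hypothetical, and "relative zDepth" is assumed here to mean a fraction of each image's own depth range.

```python
import json

# Hypothetical look format: masks are stored as procedural parameters in
# normalized units (XY as fractions of the frame, depth as a fraction of the
# image's own depth range), never as pixels, so one look can drive many images.
LOOK = {
    "containers": [
        {
            "filters": [{"type": "exposure", "stops": -1.0}],
            "masks": [
                {"type": "radial2d", "center": [0.5, 0.4], "radius": 0.25},
                {"type": "zdepth", "near": 0.2, "far": 0.5},
            ],
            "mode": "multiply",
            "mix": 0.8,
        }
    ]
}


def save_look(look: dict) -> str:
    return json.dumps(look, indent=2)


def load_look(text: str) -> dict:
    return json.loads(text)


def resolve_radial(mask: dict, width: int, height: int) -> tuple:
    # Map a normalized radial primitive onto one image's pixel grid.
    cx, cy = mask["center"]
    return cx * width, cy * height, mask["radius"] * min(width, height)


def resolve_depth(mask: dict, depth_min: float, depth_max: float) -> tuple:
    # Map a relative depth band onto one image's absolute depth range.
    span = depth_max - depth_min
    return depth_min + mask["near"] * span, depth_min + mask["far"] * span


# Usage: the same saved look resolves against two differently sized images.
look = load_look(save_look(LOOK))
radial, zband = look["containers"][0]["masks"]
landscape = resolve_radial(radial, 4000, 3000)  # about (2000, 1200, 750) px
square = resolve_radial(radial, 1080, 1080)     # about (540, 432, 270) px
```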

Workflow Concept Development

We set out to evaluate how the data flow could translate into an understandable, practical system for the user. After exploring options for structured image manipulation, we concluded that the data flow of the processing model could serve as the foundation for a UI of stacked filter operations. I then created several diagrams to illustrate the concepts, validate their efficacy with engineering, and explain the workflow to Product Management and key stakeholders:

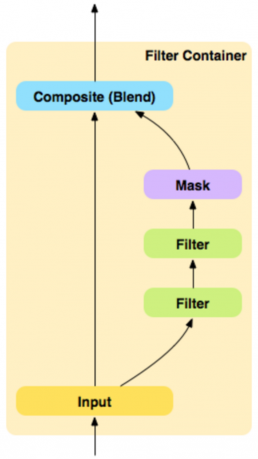

After initial architecture ideation with engineering, I worked out basic diagrams showing the flow of image data through the Depth ƒx processing stack. In this example, image data flows from bottom to top: the result of the previous evaluation step passes through the filters in the filter container shown, and the filtered result is then masked and composited with the image as it stood before this filter container.
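The per-container data flow described above can be summarized as a worked equation. The notation is introduced here for illustration and is not taken from the original design documents: $I_{k-1}$ is the image entering filter container $k$ ($I_0$ is the source image), $f_{k,1}, \dots, f_{k,n}$ are its filters in evaluation order, $M_k \in [0, 1]$ is its per-pixel mask, $\alpha_k$ is its mix value, and $B_k$ is its composite mode.

```latex
\begin{aligned}
R_k &= \left(f_{k,n} \circ \cdots \circ f_{k,2} \circ f_{k,1}\right)\!\left(I_{k-1}\right) \\
I_k &= I_{k-1} + \alpha_k \, M_k \, \bigl(B_k(I_{k-1}, R_k) - I_{k-1}\bigr)
\end{aligned}
```

With the Normal mode, $B_k(I_{k-1}, R_k) = R_k$, and the second line reduces to a linear interpolation between the incoming image and the filtered result, weighted per pixel by $\alpha_k M_k$.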

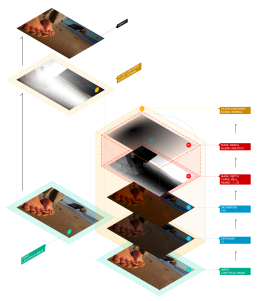

To support the basic data flow diagrams, I produced block diagrams as mock examples of how filters and filter containers would stack in the Depth ƒx UI.

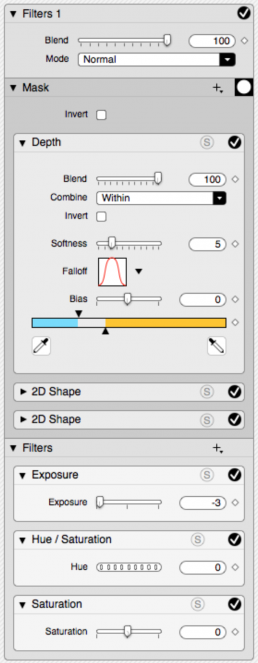

After engineering approved the data flow, I began wireframing a basic UI to show use cases that combine masks and apply filters. This example shows a robust use case that combines several masking techniques to isolate a section of the image within a single filter container; the container also applies three filters to the image.

Compositing

“Compositing” blends one image with another, using a “mix” value to control the opacity of a given image in the calculation. To give the user a sufficiently flexible toolset, compositing was incorporated into Depth ƒx as the last stage of a filter container’s operation: it is how the container’s results are blended back with the state of the image before that filter container.

In addition, the user could choose from a common set of mathematical functions, known as Composite Modes, to blend the filter container with the previous results of the image. Each mode calculates the composite with a different function and can produce very different results. The following commonly used modes are examples:

- Normal

- Add

- Screen

- Linear Dodge

- Color Dodge

- Multiply

- Linear Burn

- Color Burn

- Overlay

- Hard Light

- Linear Light

- Vivid Light
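The composite modes listed above are usually defined per channel on normalized values. The sketch below follows the W3C Compositing and Blending Level 1 formulas for Normal, Multiply, Screen, Overlay, Hard Light, Color Dodge, and Color Burn, and widely used definitions for the remaining modes, which that specification does not cover. Treating `add` as unclamped and `linear_dodge` as its clamped form is an assumption, since many applications treat the two as the same operation; the `composite` helper and its mix and mask weighting are likewise hypothetical.

```python
from typing import Callable, Dict


def _clamp(v: float) -> float:
    return max(0.0, min(1.0, v))


def _color_dodge(a: float, b: float) -> float:
    # Brighten the base by dividing it by the inverted blend value.
    if a == 0.0:
        return 0.0
    if b >= 1.0:
        return 1.0
    return min(1.0, a / (1.0 - b))


def _color_burn(a: float, b: float) -> float:
    # Darken the base by dividing its inverse by the blend value.
    if a >= 1.0:
        return 1.0
    if b <= 0.0:
        return 0.0
    return 1.0 - min(1.0, (1.0 - a) / b)


def _hard_light(a: float, b: float) -> float:
    # Multiply where the blend is dark, screen where it is light.
    return 2.0 * a * b if b <= 0.5 else 1.0 - 2.0 * (1.0 - a) * (1.0 - b)


# a = base (image before the container), b = blend (container result), both in [0, 1].
MODES: Dict[str, Callable[[float, float], float]] = {
    "normal": lambda a, b: b,
    "add": lambda a, b: a + b,  # unclamped, as in float compositing (assumption)
    "screen": lambda a, b: 1.0 - (1.0 - a) * (1.0 - b),
    "linear_dodge": lambda a, b: min(1.0, a + b),  # add, clamped to 1
    "color_dodge": _color_dodge,
    "multiply": lambda a, b: a * b,
    "linear_burn": lambda a, b: max(0.0, a + b - 1.0),
    "color_burn": _color_burn,
    "overlay": lambda a, b: _hard_light(b, a),  # hard light with base and blend swapped
    "hard_light": _hard_light,
    "linear_light": lambda a, b: _clamp(a + 2.0 * b - 1.0),
    "vivid_light": lambda a, b: (
        _color_burn(a, 2.0 * b) if b <= 0.5 else _color_dodge(a, 2.0 * b - 1.0)
    ),
}


def composite(base: float, blend: float, mode: str = "normal",
              mix: float = 1.0, mask: float = 1.0) -> float:
    """Blend one pixel with `mode`, then fade the result in by mix * mask."""
    blended = MODES[mode](base, blend)
    return base + (blended - base) * (mix * mask)
```

Keeping the modes in a lookup table means the container only needs to store a mode name, and the mix and mask weighting is applied the same way regardless of which mode is chosen.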

Masks

Masks are how the user isolates a region of the image for manipulation with filters. After careful evaluation of possible masking techniques, the following mask types proved to be a pragmatic, effective, and flexible set for region isolation:

2D Radial Falloff

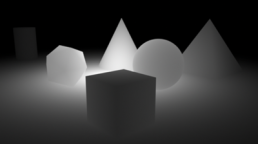

Using a simple radial falloff, regions in the XY image plane can be isolated effectively for manipulation by the Depth ƒx filters. The in-view manipulator included handles for WYSIWYG editing.
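A minimal sketch of a 2D radial falloff mask follows. The inner and outer radii stand in for the manipulator handles, and the smoothstep ramp between them is an assumed falloff shape; the function names and parameters are hypothetical.

```python
import math
from typing import List


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Hermite ramp: 0 at edge0, 1 at edge1, smooth in between."""
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)


def radial_falloff_2d(width: int, height: int, cx: float, cy: float,
                      inner: float, outer: float) -> List[float]:
    """Row-major mask: 1 within `inner` px of (cx, cy), fading to 0 at `outer`."""
    if outer <= inner:
        raise ValueError("outer radius must exceed inner radius")
    mask = []
    for y in range(height):
        for x in range(width):
            distance = math.hypot(x - cx, y - cy)
            mask.append(1.0 - smoothstep(inner, outer, distance))
    return mask


# A 5x1 strip with the center on the first pixel.
print(radial_falloff_2d(5, 1, 0.0, 0.0, inner=1.0, outer=3.0))
# [1.0, 1.0, 0.5, 0.0, 0.0]
```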

Z Depth

The depth map calculated from the lightfield image is clamped to a useful range, isolating the region of interest within the image.
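The depth clamp can be sketched as a band-pass on depth values. The `near`/`far` bounds and the optional linear `softness` ramp are hypothetical parameters, and the code assumes only that the depth map is a per-pixel list of floats in whatever units the lightfield pipeline produces.

```python
from typing import List


def depth_range_mask(depth: List[float], near: float, far: float,
                     softness: float = 0.0) -> List[float]:
    """Per-pixel weight: 1 inside [near, far], ramping to 0 over `softness` outside."""
    if far < near:
        raise ValueError("far must not be less than near")
    mask = []
    for z in depth:
        if near <= z <= far:
            weight = 1.0
        elif softness <= 0.0:
            weight = 0.0  # hard clamp: anything outside the range is excluded
        else:
            distance = near - z if z < near else z - far
            weight = max(0.0, 1.0 - distance / softness)
        mask.append(weight)
    return mask


depth_map = [0.1, 0.3, 0.45, 0.6, 0.9]  # toy depth values
print(depth_range_mask(depth_map, near=0.3, far=0.5))
# [0.0, 1.0, 1.0, 0.0, 0.0]
soft = depth_range_mask(depth_map, near=0.3, far=0.5, softness=0.2)
```

A soft edge avoids visible banding where the selected depth range meets the rest of the scene; with `softness=0.2`, the pixel at depth 0.6 receives roughly half weight.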

3D Radial Falloff

By combining a 2D area with a clamped zDepth range, a spherical region can be isolated within the volume of the image, providing a masked region in which to manipulate tonality. Combining 2D and zDepth masks in this way kept the implementation economical and required the user to consider only two mask types and their combination to achieve the effect. The result is a flexible scheme for mask creation with a minimal set of tools for the user to learn.
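One plausible way to combine the two masks is a per-pixel product, which keeps only pixels that both the 2D falloff and the depth band select; with soft edges on both, the result approximates a rounded volume around the subject. The product as the combining operation, the `intersect` name, and the toy mask values are assumptions for illustration.

```python
from typing import List

Mask = List[float]  # hypothetical: row-major per-pixel weights in [0, 1]


def intersect(mask_a: Mask, mask_b: Mask) -> Mask:
    """Keep only pixels selected by both masks (per-pixel product)."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must cover the same pixels")
    return [a * b for a, b in zip(mask_a, mask_b)]


# Toy 1x4 example: the 2D radial mask selects the two left pixels, and the
# depth-band mask selects the pixels whose depth falls in the clamped range.
radial_2d = [1.0, 1.0, 0.0, 0.0]
depth_band = [0.0, 1.0, 1.0, 0.0]
volume = intersect(radial_2d, depth_band)  # only pixel 1 lies inside both
```

A product also behaves sensibly with soft masks: a pixel at half strength in each mask ends up at a quarter strength, so the falloff tightens where both edges overlap.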

Filters

Filters apply image manipulations and effects to the source image. The initial set of filters focused on editing image tonality and color, and Image Insert was included to provide a method for background replacement. Below are examples of the initially developed filters:

Exposure

Contrast

Hue

Saturation

Colorize

Image Insert
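The tonal and color filters listed above can be sketched per pixel. The parameter conventions here are assumptions: exposure in photographic stops, contrast around an 18% grey pivot, desaturation toward Rec. 709 luma, and hue rotation and colorize via Python's `colorsys` HSV/HLS conversions, which expect components in [0, 1]. The function names are hypothetical, and Image Insert is not shown.

```python
import colorsys
from typing import Tuple

RGB = Tuple[float, float, float]  # hypothetical: linear RGB, components in [0, 1]

# Rec. 709 luma weights, used here as the grey target for desaturation.
LUMA = (0.2126, 0.7152, 0.0722)


def exposure(c: RGB, stops: float) -> RGB:
    """Scale by 2**stops, so +1 stop doubles the light."""
    gain = 2.0 ** stops
    return tuple(v * gain for v in c)


def contrast(c: RGB, amount: float, pivot: float = 0.18) -> RGB:
    """Push values away from (amount > 1) or toward (amount < 1) a mid-grey pivot."""
    return tuple(pivot + (v - pivot) * amount for v in c)


def saturation(c: RGB, amount: float) -> RGB:
    """0 gives grey, 1 leaves the color unchanged, > 1 intensifies it."""
    luma = sum(w * v for w, v in zip(LUMA, c))
    return tuple(luma + (v - luma) * amount for v in c)


def hue_shift(c: RGB, degrees: float) -> RGB:
    """Rotate hue around the HSV color wheel."""
    h, s, v = colorsys.rgb_to_hsv(*c)
    return colorsys.hsv_to_rgb((h + degrees / 360.0) % 1.0, s, v)


def colorize(c: RGB, hue_degrees: float, sat: float) -> RGB:
    """Keep the HLS lightness but replace hue and saturation with fixed values."""
    _, lightness, _ = colorsys.rgb_to_hls(*c)
    return colorsys.hls_to_rgb((hue_degrees / 360.0) % 1.0, lightness, sat)
```

Each function maps one pixel to one pixel, so any of them can serve as a filter inside a container; in a real pipeline they would run over every pixel before the container's mask and composite step.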

Examples

Below are some example renderings from the Depth ƒx workflow. Some of the more experimental studies in the Russian Doll set were developed independently and do not strictly use the Depth ƒx compositing pipeline.